The AI Escape Room: MCP as a Game Engine

Itai Reingold-Nutman

Aug 6, 2025

Introduction

Tadata typically builds tools that help companies launch Model Context Protocol (MCP) servers.

In our spare time, however, we've experimented with using MCP in a less conventional way: to power an escape room game. This “MCP Game” was enjoyable to create and play, and it offered interesting lessons about the evolving role of MCP in the world.

What Exactly Is an "MCP Game"?

The core idea for an MCP Game sprang from a realization: any game powered by a large language model (LLM) can now be given new life and capabilities through MCP. Previously, building an LLM-based game that could interact with a dynamic world, execute actions, and change state required custom, one-off integrations. MCP fundamentally changes this: the game exposes its actions as tools through a standard protocol and the LLM calls them, which greatly simplifies development.

Our specific creation is an escape room. What better way to demonstrate the thrill of this new genre of “MCP Games” than by trapping players in a virtual room, challenging them to prompt the LLM to take actions that lead to their escape? It's a fun, immersive experience where you're truly in control.

How It Works

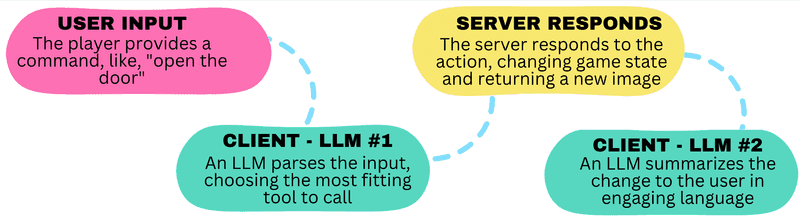

Once the game is initialized, each player turn goes through this high-level pipeline:

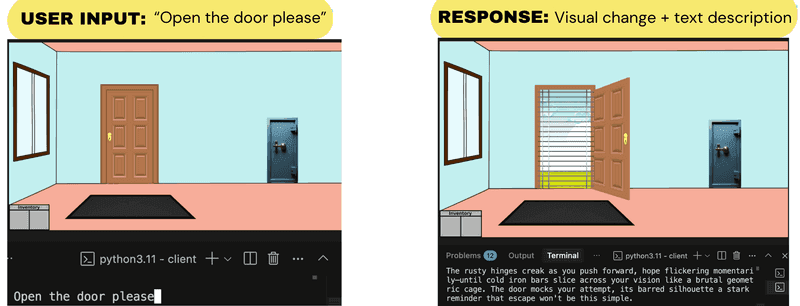

Player Input: A user provides a query in a specific room, for example, "open the door."

Client-Side Tool Selection (LLM1): The client sends that query to a first LLM, along with a system prompt and a list of available tools (e.g., open_door, look_under_rug). This LLM decides which tool/action is most relevant. (We've also added tools like impossible_action and multiple_actions to handle edge cases where the user doesn't request a single, valid action.)

Server-Side Execution: The client then executes this tool call, which triggers the server to update its internal game state. The server then generates a new image reflecting the updated state and returns it along with a factual description of what occurred (e.g., "You discovered a set of bars behind the door").

Client-Side Response Enhancement (LLM2): The client receives these factual changes and sends them, along with another system prompt and the user's original query, to a second LLM. This LLM's role is to craft a natural-language sentence that summarizes the changes in an engaging and atmospheric way.
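To make these steps concrete, here is a minimal, runnable sketch of a single turn. Everything in it is an assumption for illustration: the room (a key under the rug that unlocks the door), the RoomState fields, and the run_turn signature are hypothetical. The two LLMs are passed in as plain callables so the sketch runs offline, the server is an in-process function rather than an MCP tool call, and image generation is omitted.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


# --- Server side (step 3): a toy, hypothetical room state machine ---------
@dataclass
class RoomState:
    has_key: bool = False
    door_open: bool = False


def open_door(state: RoomState) -> str:
    if state.door_open:
        return "The door is already open."
    if not state.has_key:
        return "The door is locked."
    state.door_open = True
    return "You opened the door and discovered a set of bars behind it."


def look_under_rug(state: RoomState) -> str:
    if state.has_key:
        return "There is nothing else under the rug."
    state.has_key = True
    return "You found a small key under the rug."


HANDLERS: dict[str, Callable[[RoomState], str]] = {
    "open_door": open_door,
    "look_under_rug": look_under_rug,
}
# Edge-case tools named in the article; here they change nothing on the server.
TOOL_NAMES = set(HANDLERS) | {"impossible_action", "multiple_actions"}


def execute_tool(state: RoomState, tool: str) -> str:
    """Apply a tool to the room and return a factual description of the outcome."""
    handler = HANDLERS.get(tool)
    return handler(state) if handler else "Nothing happens."


# --- Client side (steps 2 and 4) -------------------------------------------
def run_turn(
    query: str,
    state: RoomState,
    select_tool: Callable[[str, list[str]], str],  # stands in for LLM1
    narrate: Callable[[str, str, str], str],       # stands in for LLM2
) -> str:
    tool = select_tool(query, sorted(TOOL_NAMES))
    if tool not in TOOL_NAMES:           # hallucinated tool name: safe fallback
        tool = "impossible_action"
    facts = execute_tool(state, tool)    # in the real game, an MCP tool call
    return narrate(query, tool, facts)
```

Keeping the server's output strictly factual, as execute_tool does here, leaves all of the atmosphere to LLM2 while the game logic itself stays deterministic and easy to test.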

Both the client and server are written in Python. The server is built with FastAPI and was turned into an MCP server in just a few lines of code using the open-source FastAPI-MCP library. The client and server communicate over MCP's Streamable HTTP transport.
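Under the hood, a tool call over Streamable HTTP is a JSON-RPC 2.0 `tools/call` message POSTed to the server's MCP endpoint. The sketch below builds such a request with only the standard library and parses a result made of text and image content items. It is illustrative only: it skips the `initialize` handshake and session headers the MCP specification requires, the endpoint URL is hypothetical, and FastAPI-MCP may package the game's image differently (for example, inside JSON text). A real client would use an MCP SDK rather than raw HTTP.

```python
from __future__ import annotations

import base64
import json
import urllib.request
from typing import Any


def build_tool_call(endpoint: str, request_id: int, tool: str,
                    arguments: dict[str, Any] | None = None) -> urllib.request.Request:
    """Build (but do not send) a JSON-RPC tools/call POST for a Streamable HTTP endpoint."""
    body = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments or {}},
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Streamable HTTP clients accept both a plain JSON reply and an SSE stream.
            "Accept": "application/json, text/event-stream",
        },
    )


def parse_tool_result(message: dict[str, Any]) -> tuple[str, bytes | None]:
    """Split a tools/call result into its text description and optional image bytes."""
    texts: list[str] = []
    image: bytes | None = None
    for item in message["result"]["content"]:
        if item["type"] == "text":
            texts.append(item["text"])
        elif item["type"] == "image":
            image = base64.b64decode(item["data"])  # image content is base64-encoded
    return "\n".join(texts), image
```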

Why Context Curation Was the Toughest Design Challenge

One of the most intriguing takeaways was the constant tension over what information to expose to the LLM. We wanted the LLM to be as context-aware as possible so its responses would feel natural and smooth. So we tried "dumping" everything into both LLM calls: the available tools, the full conversation history, the LLM's role context, room/state details, and even the path to success. We assumed that with a sufficiently stringent prompt discouraging hints or tool suggestions, the LLM would use this context appropriately, making the game more engaging and realistic yet still challenging.

Unfortunately, that wasn't the case. We quickly learned that as long as the LLM had even basic information like the available tools, let alone the solution path, it would inevitably offer a "helping hand," even when unprompted (e.g., "That didn't work; perhaps try X").

Because no prompt was strong enough, we had to carefully curate the LLM's input: limiting its knowledge and context was the only way to curb its helpfulness. At one point we went too far in this direction, reducing the LLM's role to tool selection alone (LLM1) and replacing LLM2 with predetermined outputs. With such rigid responses, however, the LLM's presence vanished, and the game became frustrating to play. In 2025, people expect chat games to be as dynamic as their conversations with ChatGPT and Claude.

Our current iteration strikes a balance: LLM2 still enhances responses after state changes, but its power is sharply limited. It receives only the user's input, the selected tool, the factual state change, and a simple system prompt asking for engaging output. It gets no game context, history, past actions, or tool/room state.
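One way to enforce this restriction in code is an allow-list: the client builds LLM2's prompt from a fixed set of fields and drops everything else. The sketch assumes a per-turn dictionary with hypothetical keys (user_input, tool, state_change) and a placeholder system prompt; it is not the game's actual prompt or data model.

```python
from __future__ import annotations

from typing import Any

# Placeholder system prompt; the article only says LLM2's prompt is simple.
NARRATOR_PROMPT = (
    "You narrate an escape room game. Rewrite the outcome below as one or two "
    "atmospheric sentences. Describe only what happened; never suggest next steps."
)

# The only fields LLM2 may see, mirroring the list in the text.
ALLOWED_FIELDS = ("user_input", "tool", "state_change")


def build_llm2_messages(turn: dict[str, Any]) -> list[dict[str, str]]:
    """Build chat messages for LLM2 from allow-listed fields only."""
    missing = [key for key in ALLOWED_FIELDS if key not in turn]
    if missing:
        raise KeyError(f"turn is missing fields: {missing}")
    # Copy only allow-listed fields; history, room state, the solution path,
    # and the tool catalog never make it into the prompt.
    user_content = "\n".join(f"{key}: {turn[key]}" for key in ALLOWED_FIELDS)
    return [
        {"role": "system", "content": NARRATOR_PROMPT},
        {"role": "user", "content": user_content},
    ]
```

An allow-list fails safe: if a later change adds room state or history to the turn record, that data still never reaches LLM2, whereas a deny-list would need updating every time.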

Take-Away for MCP Builders: When companies expose their software through an MCP server and decide which endpoints to expose or hide, they must consider all the unintended ways LLMs might interpret and use that information. Deciding what tools, resources, prompts, and general context to give an LLM is arguably the most significant challenge companies currently face. You want LLMs to have enough information to be helpful, but not so much that they cause unintended damage, become overwhelmed by irrelevant data, or leak sensitive information. This game is a microcosm of a much larger set of problems that companies and developers will need to solve in the coming years.

We Unlocked Game Control by Building a Custom Client

Another fascinating challenge in this project was developing our own MCP client. Since MCP was released, most of the industry's focus has been on building servers that perform cool functions, on the assumption that an existing client (like Claude's desktop app or Cursor) will always give users a meaningful way to interact with those servers.

Often, this assumption holds. But in unusual cases, like building a game, a custom client becomes important. Our client covers the typical MCP client responsibilities: managing the LLM calls and executing the needed MCP tool calls. Building it ourselves, however, gave us much more freedom. We could write specific prompts for each LLM call (in fact, the current iteration of the client has 3 different prompts for LLM2, depending on which tool was called), and we could add restrictions, such as allowing just 1 tool call per user input. Building our own MCP client was essential to truly controlling the game flow and user experience.
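Both client-side controls described in this paragraph can be sketched in a few lines. The prompt texts, the mapping from tools to prompt categories, and the function names are hypothetical; the article only states that there are 3 LLM2 prompts chosen by tool and a limit of 1 tool call per user input.

```python
from __future__ import annotations

from typing import Any

# Hypothetical prompt texts; the game's real prompts are not published here.
LLM2_PROMPTS = {
    "action": "Narrate the outcome of the player's action in one or two atmospheric sentences.",
    "impossible": "In character, tell the player this cannot be done here. Give no hints.",
    "multiple": "In character, ask the player to try one action at a time.",
}

# Hypothetical mapping from tool name to prompt category; other tools use "action".
PROMPT_FOR_TOOL = {
    "impossible_action": "impossible",
    "multiple_actions": "multiple",
}


def enforce_single_call(tool_calls: list[dict[str, Any]]) -> dict[str, Any]:
    """Allow exactly one tool call per user input.

    If LLM1 returns several calls, none of them is executed; the turn is
    rerouted to multiple_actions. No call at all becomes impossible_action.
    """
    if len(tool_calls) == 1:
        return tool_calls[0]
    if not tool_calls:
        return {"name": "impossible_action", "arguments": {}}
    return {"name": "multiple_actions", "arguments": {}}


def prompt_for(tool_name: str) -> str:
    """Pick the LLM2 system prompt for the tool that was actually called."""
    return LLM2_PROMPTS[PROMPT_FOR_TOOL.get(tool_name, "action")]
```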

Again, this relates to an interesting broader question. As companies begin building MCP servers that expose their software to LLMs, they will naturally grapple with questions like:

What information do we provide to the LLMs?

How will the LLMs use this information?

What guardrails do we need to implement?

Much of the answer lies on the server side: choosing which endpoints to expose and building those endpoints robustly and securely. But this project also highlights the value of the other end of the Model Context Protocol: the client.

Take-Away for MCP Builders: If you need complete control over how an LLM uses your tools (to enforce specific sequences of actions, add confirmation steps for sensitive operations, fine-tune prompts, etc.), building a custom MCP client can be a game-changer. It does come with extra development overhead and complexity, though, and for many use cases, existing clients like Claude or Cursor will do the job just fine!
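As an illustration of the confirmation step mentioned in this take-away, a custom client can gate sensitive tools before a call ever reaches the server. This is a minimal sketch: the tool names in SENSITIVE_TOOLS are hypothetical examples from outside the game, and call_tool and confirm are placeholders for an MCP session's tool call and a user prompt, respectively.

```python
from __future__ import annotations

from typing import Any, Callable

# Hypothetical examples of tools a client might treat as sensitive.
SENSITIVE_TOOLS = {"delete_record", "send_payment"}


def guarded_call(
    name: str,
    arguments: dict[str, Any],
    call_tool: Callable[[str, dict[str, Any]], Any],
    confirm: Callable[[str, dict[str, Any]], bool],
) -> Any:
    """Run a tool call, asking the user first if the tool is sensitive.

    call_tool would wrap the MCP session's tool call; confirm would show the
    user what is about to happen and return their answer. Declined calls
    never reach the server.
    """
    if name in SENSITIVE_TOOLS and not confirm(name, arguments):
        return {"status": "declined", "tool": name}
    return call_tool(name, arguments)
```

Because the check lives in the client, it applies no matter how the LLM was prompted, which is exactly the kind of control an off-the-shelf client may not expose.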

Conclusion

The MCP Game started as a side project, but it taught us real lessons about context control, custom clients, and the quirks of LLM behavior. It’s a tiny escape room with big implications for MCP builders. Now go play, enjoy the game, and see how fast you can break out.

The Hosted Game: Play the game and tell us how it goes!

The MCP Game: Our console version, if you're interested in playing it, exploring its code in more depth, or expanding it with new levels.

FastAPI-MCP: The open-source repo we used to develop this game; if you're developing anything MCP-based yourself, it may prove helpful.