Tavily Case Study: Accelerating Developer Success with Tadata's MCP

Itai Reingold-Nutman


Jul 15, 2025

Tavily launched an MCP server that acts as a Tavily Expert, guiding coders and vibe coders alike to a successful Tavily implementation.

The Opportunity of Product-Led Growth

Tavily, a leading provider of a search API for AI applications, thrives in a product-led growth (PLG) environment. They serve hundreds of thousands of users who make millions of API calls daily.

Unlocking Faster Developer Adoption and Supporting Vibe Coders

While Tavily already offered high-quality documentation and an intuitive developer experience, they saw an opportunity to help developers succeed even faster, especially when using AI-assisted tools like Cursor.

They also recognized that vibe coders were relying on the AI IDE’s knowledge of Tavily, which was often outdated due to the LLM's knowledge cutoff date.

Because Tavily ships quickly, the LLMs lacked the most up-to-date Tavily information and best practices. Without current knowledge of Tavily, LLM-generated code sometimes made incorrect assumptions, such as coding `query="news from CNN from last week"` instead of using the proper structured parameters: `query="news"`, `include_domains="cnn.com"`, and `timeframe="week"`.
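The Python sketch below shows the two request shapes side by side. The parameter names `query`, `include_domains`, and `timeframe` are taken from the example above. The helper `build_search_params` is hypothetical. Passing `include_domains` as a list is an assumption, so check the exact names and value formats against Tavily's current API reference.

```python
from typing import List, Optional


def build_search_params(
    query: str,
    include_domains: Optional[List[str]] = None,
    timeframe: Optional[str] = None,
) -> dict:
    """Build a structured search request (hypothetical helper).

    Constraints such as the source site and the time window go into their
    own parameters instead of being folded into the free-text query.
    """
    params = {"query": query}
    if include_domains:
        params["include_domains"] = list(include_domains)  # assumed to be a list
    if timeframe:
        params["timeframe"] = timeframe  # name taken from the article's example
    return params


# What an LLM without current Tavily knowledge might generate:
unstructured = build_search_params("news from CNN from last week")

# The structured form described above:
structured = build_search_params("news", include_domains=["cnn.com"], timeframe="week")

print(unstructured)  # {'query': 'news from CNN from last week'}
print(structured)    # {'query': 'news', 'include_domains': ['cnn.com'], 'timeframe': 'week'}
```

In the first version, the search engine receives the source and date as ordinary keywords. In the second, the API can apply them as filters.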

That's where Tadata came in.

The Tavily Expert MCP Server

Tavily partnered with Tadata to create a strategic MCP server that acts as a hands-on implementation assistant, giving AI IDEs direct access to current Tavily documentation, best practices, and even testing capabilities.

The MCP includes:

Direct API Access: Calls to Tavily's endpoints, so the AI can run test searches and verify that an implementation works correctly.

Documentation Integration: Tavily's current documentation and best practices, so the AI works from up-to-date information.

Smart Onboarding Tools: Custom tools like `tavily_start_tool` that give the AI context about the available capabilities and how to use them effectively.
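An MCP server advertises its tools to a client in the result of a `tools/list` request. Each tool has a `name`, a `description`, and a JSON Schema `inputSchema`. The sketch below builds such a listing for the three capability groups above. Only `tavily_start_tool` is named in this case study. The other tool names, all descriptions, and all schemas are hypothetical placeholders, not the actual Tavily Expert server.

```python
import json

# Hypothetical tool catalogue mirroring the three capability groups above.
# Only "tavily_start_tool" comes from the case study; the rest are placeholders.
TOOLS = [
    {   # Smart onboarding: tells the AI what else is available
        "name": "tavily_start_tool",
        "description": "Explain which Tavily tools and resources exist and when to use each.",
        "inputSchema": {"type": "object", "properties": {}},
    },
    {   # Documentation integration (hypothetical name)
        "name": "get_best_practices",
        "description": "Return current Tavily integration best practices.",
        "inputSchema": {"type": "object", "properties": {}},
    },
    {   # Documentation integration (hypothetical name)
        "name": "get_api_docs",
        "description": "Return current documentation for one Tavily endpoint.",
        "inputSchema": {
            "type": "object",
            "properties": {"endpoint": {"type": "string"}},
            "required": ["endpoint"],
        },
    },
    {   # Direct API access for test searches (hypothetical name and schema)
        "name": "run_search",
        "description": "Run a live Tavily search so the AI can verify its parameters.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "query": {"type": "string"},
                "include_domains": {"type": "array", "items": {"type": "string"}},
                "timeframe": {"type": "string"},
            },
            "required": ["query"],
        },
    },
]


def tools_list_result() -> dict:
    """Shape of the result an MCP server returns for a tools/list request."""
    return {"tools": TOOLS}


print(json.dumps([tool["name"] for tool in tools_list_result()["tools"]]))
```

The descriptions do much of the work here. They are what the AI IDE reads when deciding which tool to call next.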

Here's how it works in practice:

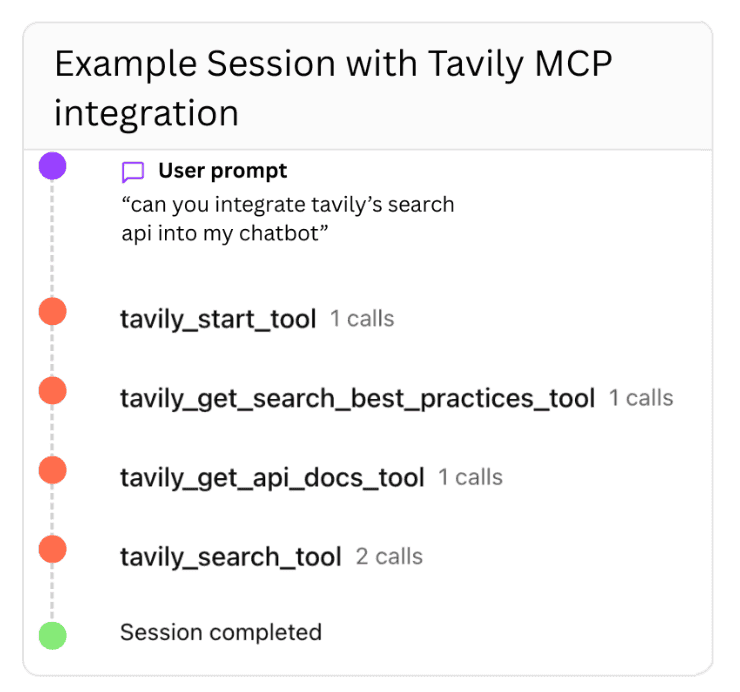

Prompt: A developer says: “can you integrate tavily’s search api into my chatbot”

AI IDE (like Cursor): Calls the start tool to learn about integration tools and resources.

Documentation Fetch: Uses the best practices tool and the API documentation tool to understand correct parameter usage and formatting.

Testing: Runs sample queries via the search tool to validate implementation.

Integration: Inserts working code directly into the user’s app!
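The session above amounts to a fixed sequence of tool calls followed by a validation gate. Here is a minimal Python sketch of that flow. The tool names and stub handlers are hypothetical stand-ins: a real client would send each call to the MCP server, and the search step would reach Tavily's API. Code is "inserted" only if the parameters match the documentation and the test search succeeds.

```python
from typing import Callable, Dict, List


# Hypothetical stub handlers standing in for real MCP tool calls.
def start_tool(_: dict) -> dict:
    return {"tools": ["best_practices", "api_docs", "search"]}

def best_practices(_: dict) -> dict:
    return {"tip": "Put source and time constraints in their own parameters."}

def api_docs(_: dict) -> dict:
    return {"params": ["query", "include_domains", "timeframe"]}

def search(args: dict) -> dict:
    # A real server would call Tavily here; the stub only checks for a query.
    return {"ok": bool(args.get("query")), "results": [{"title": "stub"}]}


TOOLS: Dict[str, Callable[[dict], dict]] = {
    "start": start_tool,
    "best_practices": best_practices,
    "api_docs": api_docs,
    "search": search,
}


def run_session(params: dict) -> List[str]:
    """Call the tools in the order described above and return the call log."""
    log: List[str] = []

    def call(name: str, args: dict) -> dict:
        log.append(name)
        return TOOLS[name](args)

    call("start", {})             # 1. learn which tools exist
    call("best_practices", {})    # 2. fetch best practices...
    docs = call("api_docs", {})   #    ...and the documented parameters
    unknown = set(params) - set(docs["params"])
    if unknown:
        raise ValueError(f"unsupported parameters: {sorted(unknown)}")
    result = call("search", params)  # 3. validate with a test query
    if result["ok"]:
        log.append("insert_code")    # 4. only now write code into the app
    return log


print(run_session({"query": "news", "include_domains": ["cnn.com"], "timeframe": "week"}))
# ['start', 'best_practices', 'api_docs', 'search', 'insert_code']
```

Checking parameters against the fetched docs before searching is what catches mistakes like the free-text CNN query earlier.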

An illustration of an example session with Tavily’s Tadata-powered MCP.

What Changed for Tavily

Tavily’s MCP server now handles thousands of calls from dozens of different MCP clients, with Cursor representing 33% of all usage. Most importantly, developers are implementing Tavily's API correctly on the first try.

Tadata brought something else to the table: detailed analytics. Tavily can now see which MCP tools are called most frequently, in what order, and where developers typically run into issues. This data helps Tavily continuously improve both its MCP server and its product.
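Each of those three questions can be answered from a plain call log: which tools are called most, in what order, and where calls fail. The following Python sketch uses a made-up log format and made-up events purely for illustration. It is not Tadata's analytics pipeline.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# Hypothetical log entries: (session_id, tool_name, succeeded), in call order.
LOG: List[Tuple[str, str, bool]] = [
    ("s1", "tavily_start_tool", True),
    ("s1", "api_docs", True),
    ("s1", "search", True),
    ("s2", "tavily_start_tool", True),
    ("s2", "search", False),
    ("s2", "api_docs", True),
    ("s2", "search", True),
]


def analyze(log):
    # 1. Which tools are called most frequently.
    calls = Counter(tool for _, tool, _ in log)

    # 2. In what order: group by session and count tool-to-tool transitions.
    sessions: Dict[str, List[str]] = defaultdict(list)
    for session_id, tool, _ in log:
        sessions[session_id].append(tool)
    transitions = Counter()
    for tools in sessions.values():
        transitions.update(zip(tools, tools[1:]))

    # 3. Where developers run into issues: failure rate per tool.
    failures = Counter(tool for _, tool, ok in log if not ok)
    failure_rate = {tool: failures[tool] / n for tool, n in calls.items()}
    return calls, transitions, failure_rate


calls, transitions, failure_rate = analyze(LOG)
print(calls.most_common(1))              # [('search', 3)]
print(transitions.most_common(1))        # [(('api_docs', 'search'), 2)]
print(round(failure_rate["search"], 2))  # 0.33
```

In this toy log, the failed search that came right before a documentation lookup is the kind of pattern that would point at a gap in onboarding.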

More Than an API MCP

Tavily's MCP server succeeds because it is not merely a direct translation of an API into MCP; it provides real guidance. The combination of current documentation, testing capabilities, and smart onboarding tools means AI development assistants can implement Tavily's API correctly the first time.

For any API-first company growing through PLG, the lesson is clear: Don’t just publish docs. Make your API understandable to the AI tools your users already rely on, in an intelligent, efficient, and effective way. The Tadata way.